Track notebooks, scripts & functions¶

For tracking pipelines, see: Pipelines – workflow managers.

# pip install lamindb

!lamin init --storage ./test-track


→ initialized lamindb: testuser1/test-track

Track a notebook or script¶

Call track() to register your notebook or script as a transform and start capturing inputs & outputs of a run.

import lamindb as ln

ln.track() # initiate a tracked notebook/script run

# your code automatically tracks inputs & outputs

ln.finish() # mark run as finished, save execution report, source code & environment

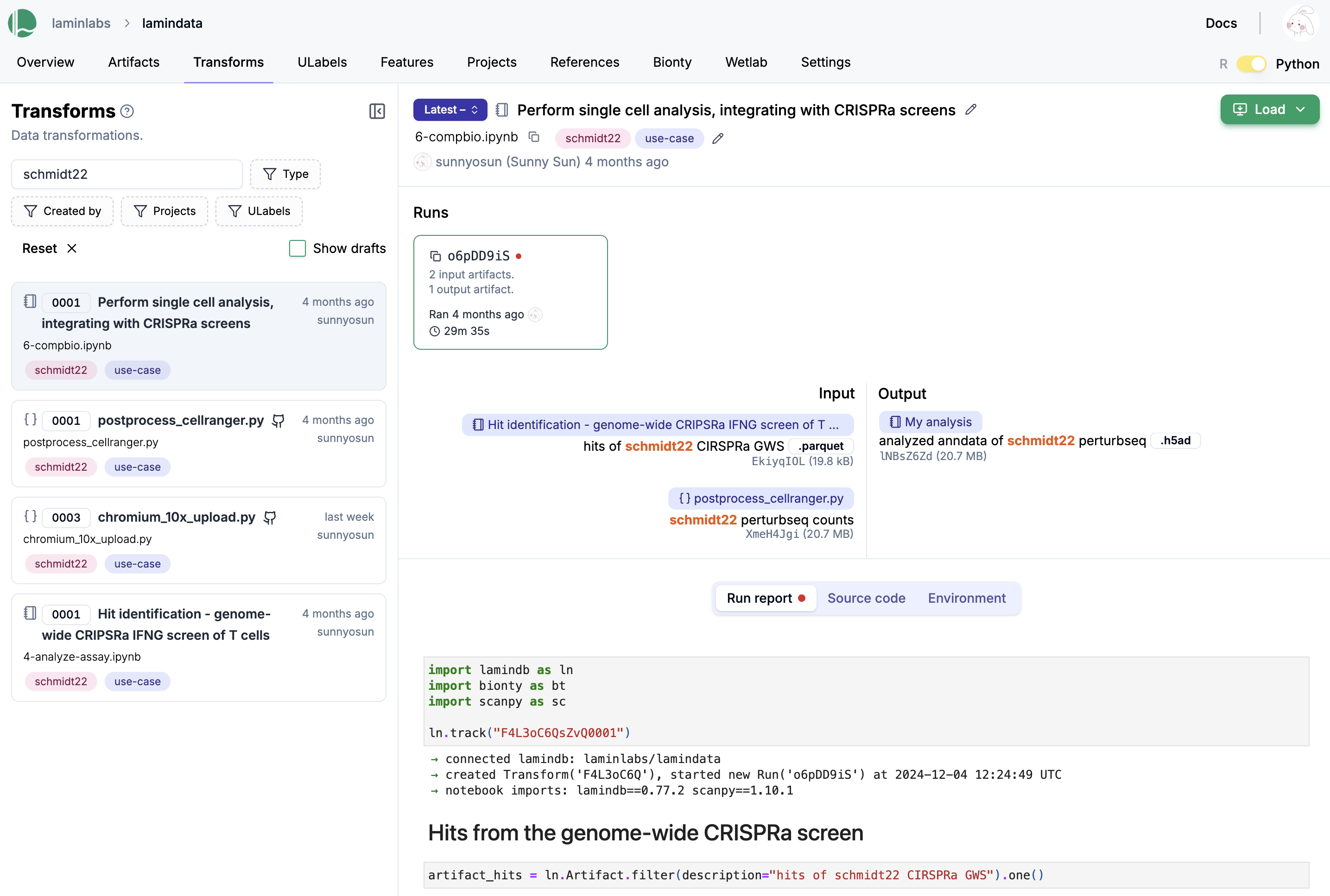

Here is how a notebook with a run report looks on the hub.

Explore it here.

You'll find your notebooks and scripts in the Transform registry (along with pipelines & functions). The Run registry stores their executions.

You can use any of the usual query methods to obtain one or more transform records, e.g.:

transform = ln.Transform.get(key="my_analyses/my_notebook.ipynb")

transform.source_code # source code

transform.runs # all runs

transform.latest_run.report # report of latest run

transform.latest_run.environment # environment of latest run

To load a notebook or script from the hub, search or filter the transform page and use the CLI.

lamin load https://lamin.ai/laminlabs/lamindata/transform/13VINnFk89PE

Use nested paths¶

If no working directory is set for LaminDB, script & notebook keys equal their filenames. If you configure a working directory, script & notebook keys equal their paths relative to the working directory.

To set the working directory to your current shell working directory, run:

lamin settings set work-dir .

You can see the current status by running:

lamin info

The default is that no working directory is set.
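The key rule described in this section can be sketched in plain Python. This is only an illustration of the rule as stated above, not LaminDB's implementation; the helper name derive_key and the example paths are hypothetical.

```python
from pathlib import PurePosixPath
from typing import Optional


def derive_key(script_path: str, work_dir: Optional[str] = None) -> str:
    """Hypothetical helper that mimics the key rule described above."""
    path = PurePosixPath(script_path)
    if work_dir is None:
        # no working directory configured: the key is just the filename
        return path.name
    # working directory configured: the key is the path relative to it
    return str(path.relative_to(work_dir))


notebook = "/home/me/repo/my_analyses/my_notebook.ipynb"  # hypothetical path
print(derive_key(notebook))  # my_notebook.ipynb
print(derive_key(notebook, work_dir="/home/me/repo"))  # my_analyses/my_notebook.ipynb
```

With a working directory set, two notebooks that share a filename but live in different folders end up with different keys.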

Use projects¶

You can link the entities created during a run to a project.

import lamindb as ln

my_project = ln.Project(name="My project").save() # create a project

ln.track(project="My project") # auto-link entities to "My project"

ln.Artifact(
    ln.examples.datasets.file_fcs(), key="my_file.fcs"
).save()  # save an artifact


→ connected lamindb: testuser1/test-track

→ created Transform('MUdUquLQhLyh0000', key='track.ipynb'), started new Run('I5Ool2E4Y9csOlos') at 2025-10-16 13:19:20 UTC

→ notebook imports: lamindb==1.13.1

• recommendation: to identify the notebook across renames, pass the uid: ln.track("MUdUquLQhLyh", project="My project")

Artifact(uid='tn8M7n0QZnQn7qLT0000', is_latest=True, key='my_file.fcs', suffix='.fcs', size=19330507, hash='rCPvmZB19xs4zHZ7p_-Wrg', branch_id=1, space_id=1, storage_id=1, run_id=1, created_by_id=1, created_at=2025-10-16 13:19:25 UTC, is_locked=False)

Filter entities by project, e.g., artifacts:

ln.Artifact.filter(projects=my_project).to_dataframe()


| id | uid | key | description | suffix | kind | otype | size | hash | n_files | n_observations | _hash_type | _key_is_virtual | _overwrite_versions | space_id | storage_id | schema_id | version | is_latest | is_locked | run_id | created_at | created_by_id | _aux | _real_key | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | tn8M7n0QZnQn7qLT0000 | my_file.fcs | None | .fcs | None | None | 19330507 | rCPvmZB19xs4zHZ7p_-Wrg | None | None | md5 | True | False | 1 | 1 | None | None | True | False | 1 | 2025-10-16 13:19:25.953000+00:00 | 1 | {'af': {'0': True}} | None | 1 |

Access entities linked to a project.

display(my_project.artifacts.to_dataframe())

display(my_project.transforms.to_dataframe())

display(my_project.runs.to_dataframe())


| id | uid | key | description | suffix | kind | otype | size | hash | n_files | n_observations | _hash_type | _key_is_virtual | _overwrite_versions | space_id | storage_id | schema_id | version | is_latest | is_locked | run_id | created_at | created_by_id | _aux | _real_key | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | tn8M7n0QZnQn7qLT0000 | my_file.fcs | None | .fcs | None | None | 19330507 | rCPvmZB19xs4zHZ7p_-Wrg | None | None | md5 | True | False | 1 | 1 | None | None | True | False | 1 | 2025-10-16 13:19:25.953000+00:00 | 1 | {'af': {'0': True}} | None | 1 |

| id | uid | key | description | type | source_code | hash | reference | reference_type | space_id | _template_id | version | is_latest | is_locked | created_at | created_by_id | _aux | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | MUdUquLQhLyh0000 | track.ipynb | Track notebooks, scripts & functions | notebook | None | None | None | None | 1 | None | None | True | False | 2025-10-16 13:19:20.537000+00:00 | 1 | None | 1 |

| id | uid | name | started_at | finished_at | params | reference | reference_type | _is_consecutive | _status_code | space_id | transform_id | report_id | _logfile_id | environment_id | initiated_by_run_id | is_locked | created_at | created_by_id | _aux | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | I5Ool2E4Y9csOlos | None | 2025-10-16 13:19:20.547205+00:00 | None | None | None | None | None | -1 | 1 | 1 | None | None | None | None | False | 2025-10-16 13:19:20.548000+00:00 | 1 | None | 1 |

Use spaces¶

You can write the entities created during a run into a space that you configure on LaminHub. This is particularly useful if you want to restrict access to a space. Note that this doesn’t affect bionty entities, which should typically remain commonly accessible.

ln.track(space="Our team space")

Track parameters¶

In addition to tracking source code, run reports & environments, you can track run parameters.

Track run parameters¶

Let’s look at the following script, which has a few parameters.

import argparse
import lamindb as ln

if __name__ == "__main__":
    p = argparse.ArgumentParser()
    p.add_argument("--input-dir", type=str)
    p.add_argument("--downsample", action="store_true")
    p.add_argument("--learning-rate", type=float)
    args = p.parse_args()
    params = {
        "input_dir": args.input_dir,
        "learning_rate": args.learning_rate,
        "preprocess_params": {
            "downsample": args.downsample,
            "normalization": "the_good_one",
        },
    }
    ln.track(params=params)

    # your code

    ln.finish()

Run the script.

!python scripts/run_track_with_params.py --input-dir ./mydataset --learning-rate 0.01 --downsample


→ connected lamindb: testuser1/test-track

→ created Transform('s6TQmexGMTBc0000', key='run_track_with_params.py'), started new Run('HI5EsMupjY3Fhx86') at 2025-10-16 13:19:29 UTC

→ params: input_dir=./mydataset, learning_rate=0.01, preprocess_params={'downsample': True, 'normalization': 'the_good_one'}

• recommendation: to identify the script across renames, pass the uid: ln.track("s6TQmexGMTBc", params={...})
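To see how the command-line flags map onto the logged params without a LaminDB instance, the argument-parsing part of the script can be exercised on its own. The sketch below repeats the parser from the script; the wrapper function build_params is a hypothetical name introduced only for illustration, and no tracking takes place.

```python
import argparse


def build_params(argv: list) -> dict:
    """Parse the same flags as the script and assemble the params dictionary."""
    p = argparse.ArgumentParser()
    p.add_argument("--input-dir", type=str)
    p.add_argument("--downsample", action="store_true")
    p.add_argument("--learning-rate", type=float)
    args = p.parse_args(argv)
    return {
        "input_dir": args.input_dir,
        "learning_rate": args.learning_rate,
        "preprocess_params": {
            "downsample": args.downsample,
            "normalization": "the_good_one",
        },
    }


# same flags as in the command above
params = build_params(
    ["--input-dir", "./mydataset", "--learning-rate", "0.01", "--downsample"]
)
print(params)
```

The resulting dictionary is nested, which is why the logged params line shows preprocess_params as a dictionary of its own.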

Query by run parameters¶

Query for all runs that match certain parameters:

import lamindb as ln

ln.Run.filter(
    params__learning_rate=0.01,
    params__input_dir="./mydataset",
    params__preprocess_params__downsample=True,
).to_dataframe()


| id | uid | name | started_at | finished_at | params | reference | reference_type | _is_consecutive | _status_code | space_id | transform_id | report_id | _logfile_id | environment_id | initiated_by_run_id | is_locked | created_at | created_by_id | _aux | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2 | HI5EsMupjY3Fhx86 | None | 2025-10-16 13:19:29.134250+00:00 | 2025-10-16 13:19:30.414785+00:00 | {'input_dir': './mydataset', 'learning_rate': ... | None | None | True | 0 | 1 | 2 | 3 | None | 2 | None | False | 2025-10-16 13:19:29.135000+00:00 | 1 | None | 1 |

Note that preprocess_params__downsample=True traverses the dictionary preprocess_params to find the key "downsample" and match it to True.
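The double-underscore traversal can be illustrated on a plain dictionary. The function params_match below is a hypothetical stand-in that supports exact matches only; it is not how LaminDB evaluates the query, which runs against the database.

```python
from typing import Any


def params_match(params: dict, **lookups: Any) -> bool:
    """Return True if every double-underscore lookup resolves to the expected value."""
    for lookup, expected in lookups.items():
        value: Any = params
        # each "__"-separated part descends one level into the nested dictionary
        for part in lookup.split("__"):
            if not isinstance(value, dict) or part not in value:
                return False
            value = value[part]
        if value != expected:
            return False
    return True


run_params = {
    "input_dir": "./mydataset",
    "learning_rate": 0.01,
    "preprocess_params": {"downsample": True, "normalization": "the_good_one"},
}

print(params_match(run_params, learning_rate=0.01, preprocess_params__downsample=True))
print(params_match(run_params, preprocess_params__normalization="another_one"))
```

The first call matches on both conditions; the second does not, because the nested value differs.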

Access parameters of a run¶

Below is how you get the parameter values that were used for a given run.

run = ln.Run.filter(params__learning_rate=0.01).order_by("-started_at").first()

run.params


{'input_dir': './mydataset',

'learning_rate': 0.01,

'preprocess_params': {'downsample': True, 'normalization': 'the_good_one'}}

Track functions¶

If you want more fine-grained data lineage tracking, use the tracked() decorator.

@ln.tracked()
def subset_dataframe(
    input_artifact_key: str,
    output_artifact_key: str,
    subset_rows: int = 2,
    subset_cols: int = 2,
) -> None:
    artifact = ln.Artifact.get(key=input_artifact_key)
    dataset = artifact.load()
    new_data = dataset.iloc[:subset_rows, :subset_cols]
    ln.Artifact.from_dataframe(new_data, key=output_artifact_key).save()

Prepare a test dataset:

df = ln.examples.datasets.mini_immuno.get_dataset1(otype="DataFrame")

input_artifact_key = "my_analysis/dataset.parquet"

artifact = ln.Artifact.from_dataframe(df, key=input_artifact_key).save()

Run the function with default params:

ouput_artifact_key = input_artifact_key.replace(".parquet", "_subsetted.parquet")

subset_dataframe(input_artifact_key, ouput_artifact_key)

Query for the output:

subsetted_artifact = ln.Artifact.get(key=ouput_artifact_key)

subsetted_artifact.view_lineage()

This is the run that created the subsetted_artifact:

subsetted_artifact.run

Run(uid='BbfyvFkP2kndXvyO', started_at=2025-10-16 13:19:31 UTC, finished_at=2025-10-16 13:19:31 UTC, params={'input_artifact_key': 'my_analysis/dataset.parquet', 'output_artifact_key': 'my_analysis/dataset_subsetted.parquet', 'subset_rows': 2, 'subset_cols': 2}, branch_id=1, space_id=1, transform_id=3, created_by_id=1, initiated_by_run_id=1, created_at=2025-10-16 13:19:31 UTC, is_locked=False)

This is the function that created it:

subsetted_artifact.run.transform

Transform(uid='cZ3CfclU5GWZ0000', is_latest=True, key='track.ipynb/subset_dataframe.py', type='function', hash='CUqkJpolJY1Q1tqyCoWIWg', branch_id=1, space_id=1, created_by_id=1, created_at=2025-10-16 13:19:31 UTC, is_locked=False)

This is the source code of this function:

subsetted_artifact.run.transform.source_code

'@ln.tracked()\ndef subset_dataframe(\n input_artifact_key: str,\n output_artifact_key: str,\n subset_rows: int = 2,\n subset_cols: int = 2,\n) -> None:\n artifact = ln.Artifact.get(key=input_artifact_key)\n dataset = artifact.load()\n new_data = dataset.iloc[:subset_rows, :subset_cols]\n ln.Artifact.from_dataframe(new_data, key=output_artifact_key).save()\n'

These are all versions of this function:

subsetted_artifact.run.transform.versions.to_dataframe()

| id | uid | key | description | type | source_code | hash | reference | reference_type | space_id | _template_id | version | is_latest | is_locked | created_at | created_by_id | _aux | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 3 | cZ3CfclU5GWZ0000 | track.ipynb/subset_dataframe.py | None | function | @ln.tracked()\ndef subset_dataframe(\n inpu... | CUqkJpolJY1Q1tqyCoWIWg | None | None | 1 | None | None | True | False | 2025-10-16 13:19:31.040000+00:00 | 1 | None | 1 |

This is the initiating run that triggered the function call:

subsetted_artifact.run.initiated_by_run

Run(uid='I5Ool2E4Y9csOlos', started_at=2025-10-16 13:19:20 UTC, branch_id=1, space_id=1, transform_id=1, created_by_id=1, created_at=2025-10-16 13:19:20 UTC, is_locked=False)

This is the transform of the initiating run:

subsetted_artifact.run.initiated_by_run.transform

Transform(uid='MUdUquLQhLyh0000', is_latest=True, key='track.ipynb', description='Track notebooks, scripts & functions', type='notebook', branch_id=1, space_id=1, created_by_id=1, created_at=2025-10-16 13:19:20 UTC, is_locked=False)

These are the parameters of the run:

subsetted_artifact.run.params

{'input_artifact_key': 'my_analysis/dataset.parquet',

'output_artifact_key': 'my_analysis/dataset_subsetted.parquet',

'subset_rows': 2,

'subset_cols': 2}
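Note that the recorded parameters include subset_rows and subset_cols even though the call relied on their defaults. One way a decorator can capture arguments together with defaults is via inspect.signature. The sketch below illustrates that general technique only; tracked_sketch and RUN_LOG are hypothetical names, and this is not how ln.tracked() is implemented.

```python
import functools
import inspect

RUN_LOG: list = []  # hypothetical in-memory stand-in for a run registry


def tracked_sketch(func):
    """Illustrative decorator that records each call's parameters."""
    signature = inspect.signature(func)

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        bound = signature.bind(*args, **kwargs)
        bound.apply_defaults()  # fill in defaults the caller did not pass
        RUN_LOG.append({"function": func.__name__, "params": dict(bound.arguments)})
        return func(*args, **kwargs)

    return wrapper


@tracked_sketch
def subset_dataframe(input_artifact_key, output_artifact_key, subset_rows=2, subset_cols=2):
    return None  # the body is irrelevant for this illustration


subset_dataframe("my_analysis/dataset.parquet", "my_analysis/dataset_subsetted.parquet")
print(RUN_LOG[0]["params"])
```

Each call appends one record, so calling the function again with a different argument yields a second, separate entry.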

These are the input artifacts:

subsetted_artifact.run.input_artifacts.to_dataframe()

| id | uid | key | description | suffix | kind | otype | size | hash | n_files | n_observations | _hash_type | _key_is_virtual | _overwrite_versions | space_id | storage_id | schema_id | version | is_latest | is_locked | run_id | created_at | created_by_id | _aux | _real_key | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 4 | g1I5yA7nbdpSDTAz0000 | my_analysis/dataset.parquet | None | .parquet | dataset | DataFrame | 9868 | wvfEBPwHL3XHiAb-o8fU6Q | None | 3 | md5 | True | False | 1 | 1 | None | None | True | False | 1 | 2025-10-16 13:19:31.013000+00:00 | 1 | {'af': {'0': True}} | None | 1 |

These are the output artifacts:

subsetted_artifact.run.output_artifacts.to_dataframe()

| id | uid | key | description | suffix | kind | otype | size | hash | n_files | n_observations | _hash_type | _key_is_virtual | _overwrite_versions | space_id | storage_id | schema_id | version | is_latest | is_locked | run_id | created_at | created_by_id | _aux | _real_key | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 5 | Vft4wxzeOKEoX9GX0000 | my_analysis/dataset_subsetted.parquet | None | .parquet | dataset | DataFrame | 3238 | UM8d9C-x_2fbc_46BScp8A | None | 2 | md5 | True | False | 1 | 1 | None | None | True | False | 3 | 2025-10-16 13:19:31.066000+00:00 | 1 | {'af': {'0': True}} | None | 1 |

Re-run the function with a different parameter:

# subset_dataframe returns None, so the result is not assigned here
subset_dataframe(input_artifact_key, ouput_artifact_key, subset_cols=3)

subsetted_artifact = ln.Artifact.get(key=ouput_artifact_key)

subsetted_artifact.view_lineage()


→ creating new artifact version for key='my_analysis/dataset_subsetted.parquet' (storage: '/home/runner/work/lamindb/lamindb/docs/test-track')

We created a new run:

subsetted_artifact.run

Run(uid='M3MRl3o07mu4JaV8', started_at=2025-10-16 13:19:32 UTC, finished_at=2025-10-16 13:19:32 UTC, params={'input_artifact_key': 'my_analysis/dataset.parquet', 'output_artifact_key': 'my_analysis/dataset_subsetted.parquet', 'subset_rows': 2, 'subset_cols': 3}, branch_id=1, space_id=1, transform_id=3, created_by_id=1, initiated_by_run_id=1, created_at=2025-10-16 13:19:32 UTC, is_locked=False)

With new parameters:

subsetted_artifact.run.params

{'input_artifact_key': 'my_analysis/dataset.parquet',

'output_artifact_key': 'my_analysis/dataset_subsetted.parquet',

'subset_rows': 2,

'subset_cols': 3}

And a new version of the output artifact:

subsetted_artifact.run.output_artifacts.to_dataframe()

| id | uid | key | description | suffix | kind | otype | size | hash | n_files | n_observations | _hash_type | _key_is_virtual | _overwrite_versions | space_id | storage_id | schema_id | version | is_latest | is_locked | run_id | created_at | created_by_id | _aux | _real_key | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 6 | Vft4wxzeOKEoX9GX0001 | my_analysis/dataset_subsetted.parquet | None | .parquet | dataset | DataFrame | 3852 | 7WGuLVamVyBMhPb2qRE_tA | None | 2 | md5 | True | False | 1 | 1 | None | None | True | False | 4 | 2025-10-16 13:19:32.488000+00:00 | 1 | {'af': {'0': True}} | None | 1 |
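Comparing this uid with the one of the first output artifact shows that both versions share their leading characters and differ only in the last four (0000 versus 0001). The sketch below assumes that layout, inferred only from the outputs shown on this page; split_uid is a hypothetical helper, not part of lamindb.

```python
def split_uid(uid: str, version_chars: int = 4) -> tuple:
    """Split a uid into a stem and a trailing version suffix (assumed layout)."""
    return uid[:-version_chars], uid[-version_chars:]


first_version = split_uid("Vft4wxzeOKEoX9GX0000")
second_version = split_uid("Vft4wxzeOKEoX9GX0001")

print(first_version)   # ('Vft4wxzeOKEoX9GX', '0000')
print(second_version)  # ('Vft4wxzeOKEoX9GX', '0001')
print(first_version[0] == second_version[0])  # True
```

The same pattern appears for transforms: the recommendation printed by ln.track() passes "MUdUquLQhLyh", which is the transform uid MUdUquLQhLyh0000 without its last four characters.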

See the state of the database:

ln.view()


Artifact

| id | uid | key | description | suffix | kind | otype | size | hash | n_files | n_observations | _hash_type | _key_is_virtual | _overwrite_versions | space_id | storage_id | schema_id | version | is_latest | is_locked | run_id | created_at | created_by_id | _aux | _real_key | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | tn8M7n0QZnQn7qLT0000 | my_file.fcs | None | .fcs | None | None | 19330507 | rCPvmZB19xs4zHZ7p_-Wrg | None | NaN | md5 | True | False | 1 | 1 | None | None | True | False | 1 | 2025-10-16 13:19:25.953000+00:00 | 1 | {'af': {'0': True}} | None | 1 |

| 4 | g1I5yA7nbdpSDTAz0000 | my_analysis/dataset.parquet | None | .parquet | dataset | DataFrame | 9868 | wvfEBPwHL3XHiAb-o8fU6Q | None | 3.0 | md5 | True | False | 1 | 1 | None | None | True | False | 1 | 2025-10-16 13:19:31.013000+00:00 | 1 | {'af': {'0': True}} | None | 1 |

| 5 | Vft4wxzeOKEoX9GX0000 | my_analysis/dataset_subsetted.parquet | None | .parquet | dataset | DataFrame | 3238 | UM8d9C-x_2fbc_46BScp8A | None | 2.0 | md5 | True | False | 1 | 1 | None | None | False | False | 3 | 2025-10-16 13:19:31.066000+00:00 | 1 | {'af': {'0': True}} | None | 1 |

| 6 | Vft4wxzeOKEoX9GX0001 | my_analysis/dataset_subsetted.parquet | None | .parquet | dataset | DataFrame | 3852 | 7WGuLVamVyBMhPb2qRE_tA | None | 2.0 | md5 | True | False | 1 | 1 | None | None | True | False | 4 | 2025-10-16 13:19:32.488000+00:00 | 1 | {'af': {'0': True}} | None | 1 |

Project

| id | uid | name | description | is_type | abbr | url | start_date | end_date | _status_code | space_id | type_id | is_locked | run_id | created_at | created_by_id | _aux | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | b20yzsJzsS7G | My project | None | False | None | None | None | None | 0 | 1 | None | False | None | 2025-10-16 13:19:19.262000+00:00 | 1 | None | 1 |

Run

| id | uid | name | started_at | finished_at | params | reference | reference_type | _is_consecutive | _status_code | space_id | transform_id | report_id | _logfile_id | environment_id | initiated_by_run_id | is_locked | created_at | created_by_id | _aux | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | I5Ool2E4Y9csOlos | None | 2025-10-16 13:19:20.547205+00:00 | NaT | None | None | None | None | -1 | 1 | 1 | NaN | None | NaN | NaN | False | 2025-10-16 13:19:20.548000+00:00 | 1 | None | 1 |

| 2 | HI5EsMupjY3Fhx86 | None | 2025-10-16 13:19:29.134250+00:00 | 2025-10-16 13:19:30.414785+00:00 | {'input_dir': './mydataset', 'learning_rate': ... | None | None | True | 0 | 1 | 2 | 3.0 | None | 2.0 | NaN | False | 2025-10-16 13:19:29.135000+00:00 | 1 | None | 1 |

| 3 | BbfyvFkP2kndXvyO | None | 2025-10-16 13:19:31.044637+00:00 | 2025-10-16 13:19:31.075403+00:00 | {'input_artifact_key': 'my_analysis/dataset.pa... | None | None | None | 0 | 1 | 3 | NaN | None | NaN | 1.0 | False | 2025-10-16 13:19:31.045000+00:00 | 1 | None | 1 |

| 4 | M3MRl3o07mu4JaV8 | None | 2025-10-16 13:19:32.464498+00:00 | 2025-10-16 13:19:32.497155+00:00 | {'input_artifact_key': 'my_analysis/dataset.pa... | None | None | None | 0 | 1 | 3 | NaN | None | NaN | 1.0 | False | 2025-10-16 13:19:32.465000+00:00 | 1 | None | 1 |

Storage

| id | uid | root | description | type | region | instance_uid | space_id | is_locked | run_id | created_at | created_by_id | _aux | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 2vQ99VsjaU1N | /home/runner/work/lamindb/lamindb/docs/test-track | None | local | None | 73KPGC58ahU9 | 1 | False | None | 2025-10-16 13:19:15.693000+00:00 | 1 | None | 1 |

Transform

| id | uid | key | description | type | source_code | hash | reference | reference_type | space_id | _template_id | version | is_latest | is_locked | created_at | created_by_id | _aux | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | MUdUquLQhLyh0000 | track.ipynb | Track notebooks, scripts & functions | notebook | None | None | None | None | 1 | None | None | True | False | 2025-10-16 13:19:20.537000+00:00 | 1 | None | 1 |

| 2 | s6TQmexGMTBc0000 | run_track_with_params.py | None | script | import argparse\nimport lamindb as ln\n\nif __... | 5RBz7zJICeKE1OSmg7gEdQ | None | None | 1 | None | None | True | False | 2025-10-16 13:19:29.131000+00:00 | 1 | None | 1 |

| 3 | cZ3CfclU5GWZ0000 | track.ipynb/subset_dataframe.py | None | function | @ln.tracked()\ndef subset_dataframe(\n inpu... | CUqkJpolJY1Q1tqyCoWIWg | None | None | 1 | None | None | True | False | 2025-10-16 13:19:31.040000+00:00 | 1 | None | 1 |

In a script¶

import argparse
import lamindb as ln


@ln.tracked()
def subset_dataframe(
    artifact: ln.Artifact,
    subset_rows: int = 2,
    subset_cols: int = 2,
    run: ln.Run | None = None,
) -> ln.Artifact:
    dataset = artifact.load(is_run_input=run)
    new_data = dataset.iloc[:subset_rows, :subset_cols]
    new_key = artifact.key.replace(".parquet", "_subsetted.parquet")
    return ln.Artifact.from_dataframe(new_data, key=new_key, run=run).save()


if __name__ == "__main__":
    p = argparse.ArgumentParser()
    p.add_argument("--subset", action="store_true")
    args = p.parse_args()
    params = {"is_subset": args.subset}
    ln.track(params=params)
    if args.subset:
        df = ln.examples.datasets.mini_immuno.get_dataset1(otype="DataFrame")
        artifact = ln.Artifact.from_dataframe(
            df, key="my_analysis/dataset.parquet"
        ).save()
        subsetted_artifact = subset_dataframe(artifact)
    ln.finish()

!python scripts/run_workflow.py --subset


→ connected lamindb: testuser1/test-track

→ created Transform('sFydgjof7Lie0000', key='run_workflow.py'), started new Run('MA2eubYwRDmdMQ7z') at 2025-10-16 13:19:35 UTC

→ params: is_subset=True

• recommendation: to identify the script across renames, pass the uid: ln.track("sFydgjof7Lie", params={...})

→ returning artifact with same hash: Artifact(uid='g1I5yA7nbdpSDTAz0000', is_latest=True, key='my_analysis/dataset.parquet', suffix='.parquet', kind='dataset', otype='DataFrame', size=9868, hash='wvfEBPwHL3XHiAb-o8fU6Q', n_observations=3, branch_id=1, space_id=1, storage_id=1, run_id=1, created_by_id=1, created_at=2025-10-16 13:19:31 UTC, is_locked=False); to track this artifact as an input, use: ln.Artifact.get()

! cannot infer feature type of: None, returning '?

! skipping param run because dtype not JSON serializable

→ returning artifact with same hash: Artifact(uid='Vft4wxzeOKEoX9GX0001', is_latest=True, key='my_analysis/dataset_subsetted.parquet', suffix='.parquet', kind='dataset', otype='DataFrame', size=3852, hash='7WGuLVamVyBMhPb2qRE_tA', n_observations=2, branch_id=1, space_id=1, storage_id=1, run_id=4, created_by_id=1, created_at=2025-10-16 13:19:32 UTC, is_locked=False); to track this artifact as an input, use: ln.Artifact.get()

→ returning artifact with same hash: Artifact(uid='L0ST0mguEK5zWSbc0000', is_latest=True, description='log streams of run HI5EsMupjY3Fhx86', suffix='.txt', kind='__lamindb_run__', size=0, hash='1B2M2Y8AsgTpgAmY7PhCfg', branch_id=1, space_id=1, storage_id=1, created_by_id=1, created_at=2025-10-16 13:19:30 UTC, is_locked=False); to track this artifact as an input, use: ln.Artifact.get()

! updated description from log streams of run HI5EsMupjY3Fhx86 to log streams of run MA2eubYwRDmdMQ7z

ln.view()


Artifact

| id | uid | key | description | suffix | kind | otype | size | hash | n_files | n_observations | _hash_type | _key_is_virtual | _overwrite_versions | space_id | storage_id | schema_id | version | is_latest | is_locked | run_id | created_at | created_by_id | _aux | _real_key | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | tn8M7n0QZnQn7qLT0000 | my_file.fcs | None | .fcs | None | None | 19330507 | rCPvmZB19xs4zHZ7p_-Wrg | None | NaN | md5 | True | False | 1 | 1 | None | None | True | False | 1 | 2025-10-16 13:19:25.953000+00:00 | 1 | {'af': {'0': True}} | None | 1 |

| 4 | g1I5yA7nbdpSDTAz0000 | my_analysis/dataset.parquet | None | .parquet | dataset | DataFrame | 9868 | wvfEBPwHL3XHiAb-o8fU6Q | None | 3.0 | md5 | True | False | 1 | 1 | None | None | True | False | 1 | 2025-10-16 13:19:31.013000+00:00 | 1 | {'af': {'0': True}} | None | 1 |

| 5 | Vft4wxzeOKEoX9GX0000 | my_analysis/dataset_subsetted.parquet | None | .parquet | dataset | DataFrame | 3238 | UM8d9C-x_2fbc_46BScp8A | None | 2.0 | md5 | True | False | 1 | 1 | None | None | False | False | 3 | 2025-10-16 13:19:31.066000+00:00 | 1 | {'af': {'0': True}} | None | 1 |

| 6 | Vft4wxzeOKEoX9GX0001 | my_analysis/dataset_subsetted.parquet | None | .parquet | dataset | DataFrame | 3852 | 7WGuLVamVyBMhPb2qRE_tA | None | 2.0 | md5 | True | False | 1 | 1 | None | None | True | False | 4 | 2025-10-16 13:19:32.488000+00:00 | 1 | {'af': {'0': True}} | None | 1 |

Project

| id | uid | name | description | is_type | abbr | url | start_date | end_date | _status_code | space_id | type_id | is_locked | run_id | created_at | created_by_id | _aux | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | b20yzsJzsS7G | My project | None | False | None | None | None | None | 0 | 1 | None | False | None | 2025-10-16 13:19:19.262000+00:00 | 1 | None | 1 |

Run

| id | uid | name | started_at | finished_at | params | reference | reference_type | _is_consecutive | _status_code | space_id | transform_id | report_id | _logfile_id | environment_id | initiated_by_run_id | is_locked | created_at | created_by_id | _aux | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | I5Ool2E4Y9csOlos | None | 2025-10-16 13:19:20.547205+00:00 | NaT | None | None | None | None | -1 | 1 | 1 | NaN | None | NaN | NaN | False | 2025-10-16 13:19:20.548000+00:00 | 1 | None | 1 |

| 2 | HI5EsMupjY3Fhx86 | None | 2025-10-16 13:19:29.134250+00:00 | 2025-10-16 13:19:30.414785+00:00 | {'input_dir': './mydataset', 'learning_rate': ... | None | None | True | 0 | 1 | 2 | 3.0 | None | 2.0 | NaN | False | 2025-10-16 13:19:29.135000+00:00 | 1 | None | 1 |

| 3 | BbfyvFkP2kndXvyO | None | 2025-10-16 13:19:31.044637+00:00 | 2025-10-16 13:19:31.075403+00:00 | {'input_artifact_key': 'my_analysis/dataset.pa... | None | None | None | 0 | 1 | 3 | NaN | None | NaN | 1.0 | False | 2025-10-16 13:19:31.045000+00:00 | 1 | None | 1 |

| 4 | M3MRl3o07mu4JaV8 | None | 2025-10-16 13:19:32.464498+00:00 | 2025-10-16 13:19:32.497155+00:00 | {'input_artifact_key': 'my_analysis/dataset.pa... | None | None | None | 0 | 1 | 3 | NaN | None | NaN | 1.0 | False | 2025-10-16 13:19:32.465000+00:00 | 1 | None | 1 |

| 5 | MA2eubYwRDmdMQ7z | None | 2025-10-16 13:19:35.737513+00:00 | 2025-10-16 13:19:37.132374+00:00 | {'is_subset': True} | None | None | True | 0 | 1 | 4 | 3.0 | None | 2.0 | NaN | False | 2025-10-16 13:19:35.738000+00:00 | 1 | None | 1 |

| 6 | HNhmBbHqWUe4TxMB | None | 2025-10-16 13:19:37.106117+00:00 | 2025-10-16 13:19:37.129787+00:00 | {'artifact': 'Artifact[g1I5yA7nbdpSDTAz0000]',... | None | None | None | 0 | 1 | 5 | NaN | None | NaN | 5.0 | False | 2025-10-16 13:19:37.106000+00:00 | 1 | None | 1 |

Storage

| id | uid | root | description | type | region | instance_uid | space_id | is_locked | run_id | created_at | created_by_id | _aux | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 2vQ99VsjaU1N | /home/runner/work/lamindb/lamindb/docs/test-track | None | local | None | 73KPGC58ahU9 | 1 | False | None | 2025-10-16 13:19:15.693000+00:00 | 1 | None | 1 |

Transform

| id | uid | key | description | type | source_code | hash | reference | reference_type | space_id | _template_id | version | is_latest | is_locked | created_at | created_by_id | _aux | branch_id |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | MUdUquLQhLyh0000 | track.ipynb | Track notebooks, scripts & functions | notebook | None | None | None | None | 1 | None | None | True | False | 2025-10-16 13:19:20.537000+00:00 | 1 | None | 1 |

| 2 | s6TQmexGMTBc0000 | run_track_with_params.py | None | script | import argparse\nimport lamindb as ln\n\nif __... | 5RBz7zJICeKE1OSmg7gEdQ | None | None | 1 | None | None | True | False | 2025-10-16 13:19:29.131000+00:00 | 1 | None | 1 |

| 3 | cZ3CfclU5GWZ0000 | track.ipynb/subset_dataframe.py | None | function | @ln.tracked()\ndef subset_dataframe(\n inpu... | CUqkJpolJY1Q1tqyCoWIWg | None | None | 1 | None | None | True | False | 2025-10-16 13:19:31.040000+00:00 | 1 | None | 1 |

| 4 | sFydgjof7Lie0000 | run_workflow.py | None | script | import argparse\nimport lamindb as ln\n\n\n@ln... | fwij4oyLV27mmm9f2GVY_A | None | None | 1 | None | None | True | False | 2025-10-16 13:19:35.735000+00:00 | 1 | None | 1 |

| 5 | 88muOREZ3pVI0000 | run_workflow.py/subset_dataframe.py | None | function | @ln.tracked()\ndef subset_dataframe(\n arti... | 9NYMDP5l5Iuu9F8VrO3vWQ | None | None | 1 | None | None | True | False | 2025-10-16 13:19:37.104000+00:00 | 1 | None | 1 |

Sync scripts with git¶

To sync with your git commit, add the following line to your script:

ln.settings.sync_git_repo = <YOUR-GIT-REPO-URL>

import lamindb as ln

ln.settings.sync_git_repo = "https://github.com/..."

ln.track()

# your code

ln.finish()

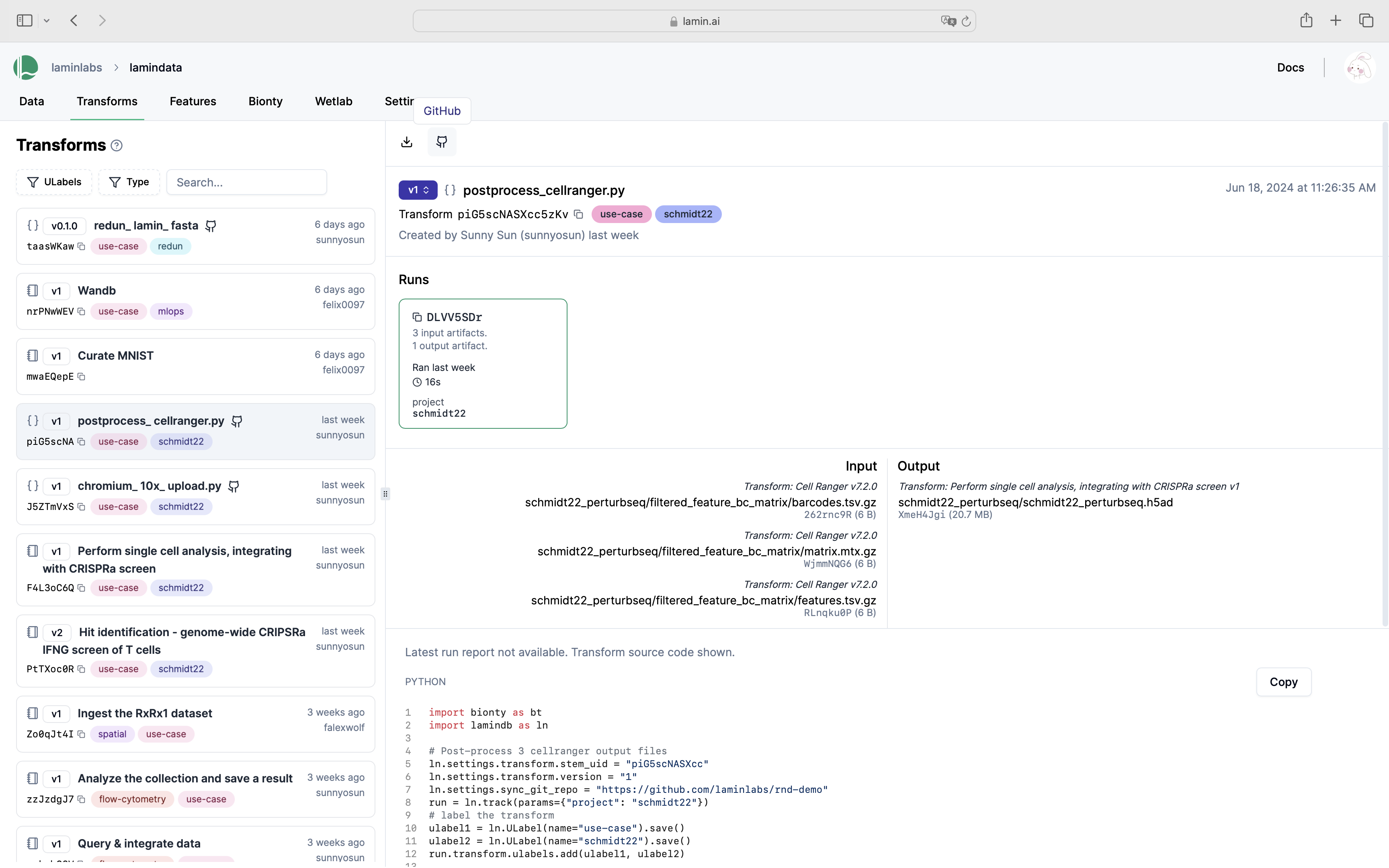

You’ll now see a clickable GitHub emoji on the hub.

Manage notebook templates¶

A notebook acts like a template when you load it with lamin load. Suppose you run:

lamin load https://lamin.ai/account/instance/transform/Akd7gx7Y9oVO0000

Upon running the returned notebook, you’ll automatically create a new version and be able to browse it via the version dropdown on the UI.

Additionally, you can:

- label using Record, e.g., transform.records.add(template_label)

- tag with an indicative version string, e.g., transform.version = "T1"; transform.save()

Saving a notebook as an artifact

Sometimes you might want to save a notebook as an artifact. This is how you can do it:

lamin save template1.ipynb --key templates/template1.ipynb --description "Template for analysis type 1" --registry artifact

A few checks at the end of this notebook:

assert run.params == {
    "input_dir": "./mydataset",
    "learning_rate": 0.01,
    "preprocess_params": {"downsample": True, "normalization": "the_good_one"},
}

assert my_project.artifacts.exists()

assert my_project.transforms.exists()

assert my_project.runs.exists()